Autonomous robots use sound to "feel" objects.

2025-07-09 09:20:40

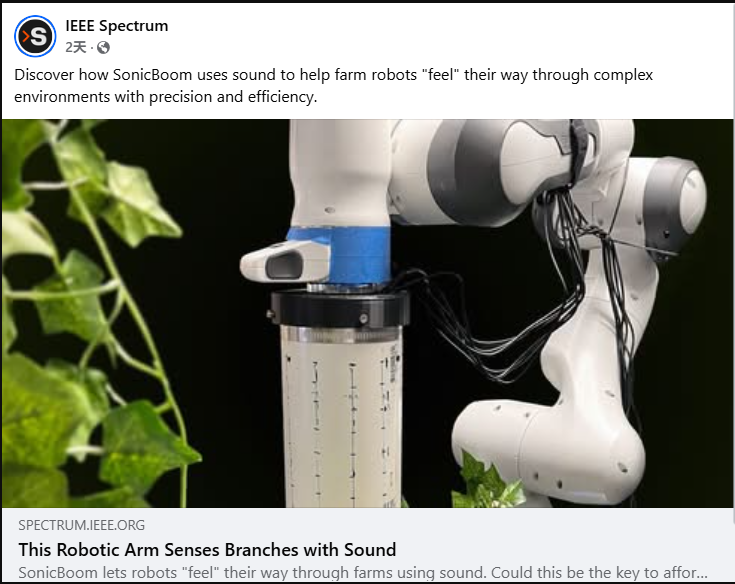

In a recent study, researchers developed a sensing system called SonicBoom that lets autonomous robots perceive the objects they touch by listening to the sound of the contact. The method can locate, or "feel," a contact point with centimeter-level precision. The research was published in IEEE Robotics and Automation Letters.


Current Sensing Methods

Many autonomous robots currently rely on arrays of small camera-based tactile sensors. In each sensor, a miniature camera sits beneath a protective gel layer and estimates tactile information by watching how the gel deforms. This approach is poorly suited to agricultural environments, however, where branches may obstruct visual sensors. Camera-based sensors are also expensive and easily damaged in such settings.

Another option is pressure sensors, but these require covering a large portion of the robot's surface to effectively detect contact with objects like branches. Equipping an entire robotic arm with such sensors would be prohibitively costly.

How Does SonicBoom Work?

Unlike tactile or pressure sensors, SonicBoom takes a fundamentally different approach: it perceives contact through sound. The system uses contact microphones, which pick up vibrations traveling through solid materials rather than through the air, so a physical contact registers as an acoustic signal.
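Before a contact can be localized, it has to be noticed in the microphone stream. The article does not say how SonicBoom does this; the following is a minimal, hypothetical sketch of one common way to flag a contact: compare the short-time energy of each frame against a noise floor estimated from the quiet start of the recording. The function name `detect_contact_onset` and its parameters (`frame_len`, `threshold_ratio`, `noise_frames`) are illustrative assumptions, not part of the published system.

```python
import math


def detect_contact_onset(signal, frame_len=32, threshold_ratio=8.0, noise_frames=4):
    """Return the start index of the first frame whose energy exceeds
    threshold_ratio times the noise floor, or None if no frame does.

    Hypothetical helper: assumes the first `noise_frames` frames contain
    no contact, so their average energy serves as the noise floor.
    """
    n_frames = len(signal) // frame_len
    if n_frames <= noise_frames:
        return None  # not enough data to estimate a noise floor

    # Mean squared amplitude (short-time energy) of each non-overlapping frame.
    energies = []
    for i in range(n_frames):
        frame = signal[i * frame_len:(i + 1) * frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)

    # Guard against a perfectly silent lead-in (division-free, but avoids a zero floor).
    noise_floor = max(sum(energies[:noise_frames]) / noise_frames, 1e-12)

    for i in range(noise_frames, n_frames):
        if energies[i] > threshold_ratio * noise_floor:
            return i * frame_len
    return None


# Synthetic example: faint background vibration, then a decaying "tap" at sample 400.
quiet = [0.01 * math.sin(0.3 * n) for n in range(400)]
tap = [math.exp(-n / 50) * math.sin(0.8 * n) for n in range(200)]
onset = detect_contact_onset(quiet + tap)
```

The detector reports the start of the frame containing the tap, so its resolution is limited to `frame_len` samples; finer timing would come from the multi-microphone comparison that follows.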

When a robotic arm touches a branch, the resulting vibrations travel through the arm to an array of contact microphones. Small differences in the signals the microphones receive (for example, in strength and phase) let the system triangulate where the sound originated, and therefore where the contact occurred.
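To make the idea of locating a contact from inter-microphone differences concrete, here is a deliberately idealized sketch using only timing (phase) differences. It assumes a straight, uniform arm segment of length `length` with one microphone at each end and a single constant wave speed; real arms are not like this, and the article does not say SonicBoom uses this formula. If the contact is at distance x from microphone A, the signal reaches B later than A by Δt = (L − 2x)/v, so x = (L − v·Δt)/2. The lag Δt is estimated by brute-force cross-correlation. All names and numbers (`estimate_lag`, `localize_1d`, 2000 m/s, 100 kHz) are hypothetical.

```python
import math


def estimate_lag(a, b, max_lag):
    """Return the lag in samples that best aligns b with a
    (positive means b arrives later than a), by brute-force cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n in range(len(a)):
            m = n + lag
            if 0 <= m < len(b):
                score += a[n] * b[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def localize_1d(lag_samples, fs, wave_speed, length):
    """Contact position measured from microphone A, assuming microphones at
    both ends of a uniform segment: lag = (length - 2x) / wave_speed."""
    dt = lag_samples / fs
    return (length - wave_speed * dt) / 2


def pulse(n):
    # Hypothetical contact waveform: a decaying oscillation starting at n = 0.
    return math.exp(-n / 10) * math.sin(0.9 * n) if n >= 0 else 0.0


fs = 100_000          # assumed sampling rate, Hz
wave_speed = 2000.0   # assumed wave speed in the arm, m/s
length = 1.0          # assumed distance between the two microphones, m
x_true = 0.3          # simulated contact position, m from microphone A

delay_a = round(x_true / wave_speed * fs)
delay_b = round((length - x_true) / wave_speed * fs)
mic_a = [pulse(n - delay_a) for n in range(200)]
mic_b = [pulse(n - delay_b) for n in range(200)]

lag = estimate_lag(mic_a, mic_b, max_lag=50)
x_est = localize_1d(lag, fs, wave_speed, length)
```

With these assumed numbers, a one-sample error in the lag moves the estimate by v/(2·fs) = 1 cm, and real arms have joints, varying cross-sections, and frequency-dependent wave speeds. That gap between the idealized geometry and a real robot is one motivation for learning the mapping from data instead.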

Because the microphones sense vibrations inside the structure, they can be embedded deeper within the robotic arm, where they are far less prone to damage than external visual sensors. The contact microphones also easily withstand rough or abrasive interactions.

Moreover, the system uses a sparse array of microphones distributed across the arm, rather than the dense coverage required by visual or pressure sensors.

To improve SonicBoom's localization accuracy, the researchers trained an AI model on data collected from more than 18,000 strikes of a wooden stick against the robotic arm. With this model, SonicBoom located contact points on the arm with an error of just 0.43 cm for the objects it was trained on. For new objects made of other materials, such as plastic or aluminum, the error was 2.22 cm.
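The article does not describe the model's architecture, so the sketch below only illustrates the general recipe of learning a mapping from recorded contacts to positions and then scoring it by localization error. As a plainly swapped-in stand-in, it uses k-nearest-neighbor regression on hypothetical two-value features (energy at a microphone at each end of a 100 cm arm, decaying exponentially with distance). The feature model, `alpha`, `k`, and the mean-absolute-error metric are all assumptions for illustration.

```python
import math


def features(pos_cm, alpha=0.02, arm_len_cm=100.0):
    # Hypothetical features: signal energy at microphones placed at each end
    # of the arm, decaying exponentially with propagation distance.
    return (math.exp(-alpha * pos_cm), math.exp(-alpha * (arm_len_cm - pos_cm)))


def knn_predict(train, query, k=2):
    """train: list of (feature_tuple, position_cm) pairs.
    Returns the average position of the k training examples nearest to query."""
    ranked = sorted(train, key=lambda item: math.dist(item[0], query))
    return sum(pos for _, pos in ranked[:k]) / k


def mean_localization_error(train, test_positions, k=2):
    """Mean absolute error (cm) between predicted and true contact positions."""
    errors = [abs(knn_predict(train, features(p), k) - p) for p in test_positions]
    return sum(errors) / len(errors)


# "Training data": simulated strikes at every centimeter along the arm.
train = [(features(p), float(p)) for p in range(0, 101)]

# Evaluate on contact positions that fall between training strikes.
err = mean_localization_error(train, [10.5, 42.25, 77.8])
```

Because the features here come from the same simulated process as the training data, this corresponds only to the "trained objects" case; the article's higher error for plastic or aluminum objects reflects contacts whose signals differ from the wooden-stick training strikes.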